Generative AI – Will it be a friend or a foe? Unregulated artificial intelligence empowered with reasoning and autonomy may be scarier than you think.

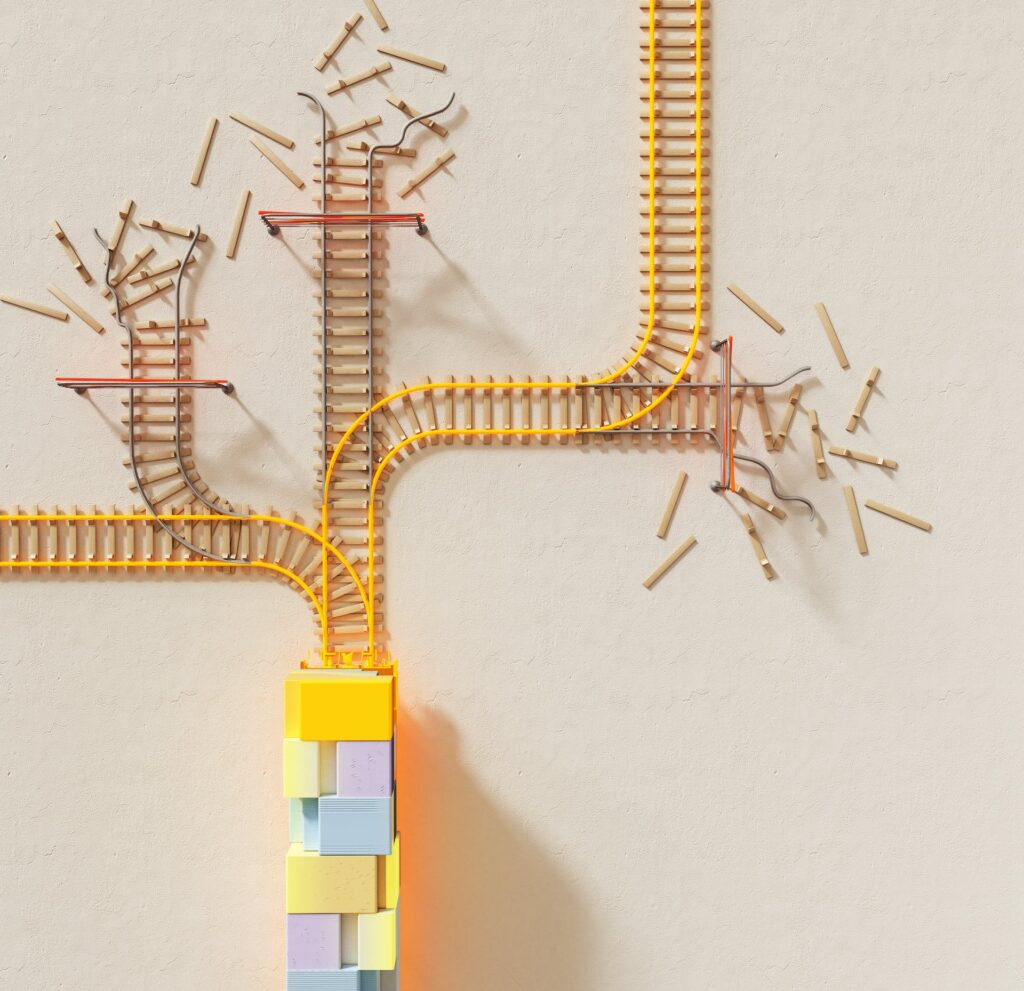

Generative AI has undeniable potential to revolutionize many aspects of our world, driving innovation in products, companies, industries, and economies. Alongside its unique capabilities, however, come significant security and privacy concerns.

The remarkable creative power of Generative AI has introduced a whole new set of challenges for safeguarding sensitive information. Enterprises now find themselves grappling with important questions:

- Who owns the training data, the model, and the generated outputs?

- Does the AI system itself have rights to future data it creates?

- How can we ensure the protection of these rights?

- How do we govern data privacy when utilizing Generative AI?

The list of concerns goes on.

Unsurprisingly, many enterprises are proceeding with caution. Glaring security and privacy vulnerabilities, coupled with a reluctance to rely on stopgap solutions, are driving some organizations to ban Generative AI tools altogether. However, there is hope.

Confidential Computing

Confidential computing, a novel approach to data security that protects data while it is in use and maintains code integrity, holds the key to addressing the complex and serious security concerns associated with large language models (LLMs). It enables enterprises to fully harness the power of Generative AI without compromising safety. But before we delve into the solution, let’s first examine why Generative AI is uniquely vulnerable.

Generative AI has the remarkable ability to assimilate vast amounts of a company’s data or a knowledge-rich subset, resulting in an intelligent model that can generate fresh ideas on demand. This is undeniably enticing, but it also poses immense challenges for enterprises in terms of maintaining control over proprietary data and complying with evolving regulations.

Protecting training data and models must be the utmost priority. Merely encrypting database fields or form entries is no longer sufficient. Without robust data security and trust controls, this concentration of knowledge, and the outputs generated from it, could inadvertently turn Generative AI into a tool for abuse, theft, and illicit activity.

Indeed, employees are increasingly entering confidential business documents, client data, source code, and other regulated information into LLMs. Because these models may be partially trained on new inputs, a breach could result in significant intellectual property leaks. Furthermore, if the models themselves are compromised, any content that a company is legally or contractually obligated to protect could be exposed. In a worst-case scenario, a competitor or nation-state actor that steals a model and its data could replicate the model and extract the information it contains.

Is there a way forward for Generative AI?

The stakes are incredibly high. A recent study reported that a significant number of organizations have experienced privacy breaches or security incidents related to AI, with over half of them stemming from data compromises by internal parties. Generative AI could push these numbers even higher, creating turmoil across the industry.

In addition, enterprises must keep pace with evolving privacy regulations when adopting Generative AI. Across industries, there is a profound responsibility and incentive to remain compliant with data requirements. For instance, in healthcare, AI-powered personalized medicine holds immense potential for improving patient outcomes and overall efficiency. However, healthcare providers and researchers must handle substantial volumes of sensitive patient data while remaining compliant, which presents a new dilemma.

To overcome these challenges, and the many more that will undoubtedly arise, Generative AI requires a new security foundation that goes beyond encrypting individual fields or rows. When Generative AI outputs are used to make critical decisions, it becomes crucial to demonstrate the integrity of the underlying code and data so that those outputs can be trusted. This is essential for regulatory compliance and for managing potential legal liability.

A robust mechanism is needed to provide comprehensive protection for the entire computation and its runtime environment.

Thank you for joining us on this journey of exploration and discovery at ABX Associates. We’re thrilled to have you as part of our vibrant community. As we delve into the realms of industry, commerce, and lifestyle, we strive to bring you valuable insights, expert perspectives, and the latest trends.

Like, Subscribe and Share!

Your interaction means the world to us. Together, let’s create a space where curiosity meets knowledge, and where discussions flourish. Thank you for being a part of the ABX journey. Let’s learn, grow, and inspire together!